INTERACTIVE/REAL-TIME DIGITAL MULTIMEDIA SYSTEMS

Interactive Multimedia Performance Environment for Unless (2009)

I designed the interactive multimedia performance environment for Unless (2009) to respond to the dancer’s physical gestures by linking specific aspects of the movement to the media. The environment included two interactive music systems and one graphics system. The physical interface consisted of two Nintendo Wiimote game controllers, one held in each of the performer’s hands, whose built-in accelerometers detected the dancer’s motion. The pitch, roll, and overall acceleration data from the accelerometers proved the most useful for expressivity. In aviation or nautical terms, when a vessel pitches, its nose moves up or down; when it rolls, it tilts to the left or right about its nose-to-tail axis. Those movements were interesting for Emily to explore in relation to the interactive music systems.
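To make the pitch, roll, and overall acceleration values concrete, here is a minimal Python sketch of how they can be estimated from a single three-axis accelerometer sample. It is not the code used in Unless: the function names, the axis convention (y along the controller’s length, z out of its face, values in units of g), and the 0 to 127 control range are all assumptions chosen for illustration.

```python
import math

def pitch_roll_magnitude(ax, ay, az):
    """Estimate pitch and roll (degrees) and overall acceleration (g)
    from one accelerometer sample. Axis convention is an assumption:
    y runs along the controller, z points out of its face."""
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    # Pitch: how far the long axis tips up or down relative to gravity.
    pitch = math.degrees(math.atan2(ay, math.sqrt(ax * ax + az * az)))
    # Roll: rotation about the long axis (tilting left or right).
    roll = math.degrees(math.atan2(ax, az))
    return pitch, roll, magnitude

def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map a value between ranges, clamping to the input range."""
    value = max(in_lo, min(in_hi, value))
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)

# Hypothetical sample: controller lying flat and still.
pitch, roll, magnitude = pitch_roll_magnitude(0.0, 0.0, 1.0)
# Map pitch to a hypothetical 0-127 music control value.
control = scale(pitch, -90.0, 90.0, 0.0, 127.0)
```

These tilt estimates are only reliable while the controller moves slowly, because the accelerometer cannot separate gravity from the dancer’s own acceleration; the overall magnitude is the more useful signal during fast gestures.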

Physical Rehabilitation with Interactive Audio-Visual System

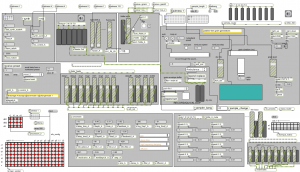

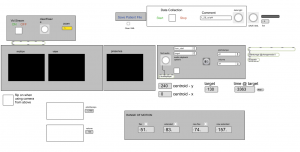

In collaboration with Dr. Amir Lahav and Harvard Medical School, I led software development on a computer-vision-based interactive music therapy program used to facilitate recovery in patients with physical disabilities. The software analyzes the patient’s movement while they perform various rehabilitation exercises. In turn, the system outputs visual, sound, and music cues that both provide feedback on performance and motivate continued progress. The program also features a real-time data collection component that supports the scientific research. Pilot study results were very promising.
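The movement-to-feedback loop can be illustrated with frame differencing, one common way to quantify motion from video. The description above does not say which analysis the program actually used, so the Python sketch below is an assumption throughout: the function names, the brightness threshold, and the mapping from motion to a cue level are hypothetical.

```python
def motion_amount(prev_frame, frame, threshold=30):
    """Fraction of pixels whose brightness changed by more than `threshold`.
    Frames are lists of rows of grayscale values (0-255)."""
    changed = 0
    total = 0
    for prev_row, row in zip(prev_frame, frame):
        for before, after in zip(prev_row, row):
            total += 1
            if abs(after - before) > threshold:
                changed += 1
    return changed / total if total else 0.0

def cue_level(motion, full_scale=0.5):
    """Map a motion fraction to a 0.0-1.0 feedback level (e.g. volume)."""
    return min(1.0, motion / full_scale)

# Hypothetical 2x2 frames: one of four pixels changes strongly.
previous = [[0, 0], [0, 0]]
current = [[0, 100], [0, 0]]
level = cue_level(motion_amount(previous, current))
```

Logging each `motion_amount` value with a timestamp would be one simple way to approximate the real-time data collection mentioned above.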

*Custom software designed by Brian Knoth with softVNS (by David Rokeby) and Max/MSP/Jitter

Real-Time Audio Control of 3D Graphics

The OpenGL Morpher takes advantage of Jitter’s user-friendly access to the OpenGL API to manipulate 3D models (.obj files) with audio data in real time. Behind the scenes, an FFT process analyzes the audio stream (either live input or a sound file) and extracts useful information for morphing the vertices of the 3D model (represented in matrix form).
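The general idea of driving vertices from a spectrum can be sketched in a few lines of Python. This is not the Morpher’s Jitter patch: the function names, the equal-width frequency bands, and the choice to push vertices outward from the origin are assumptions for illustration, and a naive DFT stands in for the FFT so the example needs only the standard library.

```python
import cmath
import math

def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (an FFT yields the same values faster)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))) / n
        for k in range(n // 2 + 1)
    ]

def band_levels(spectrum, bands):
    """Average the spectrum into `bands` equal-width groups of bins.
    Bins left over after the last full group are ignored."""
    size = max(1, len(spectrum) // bands)
    return [sum(spectrum[i * size:(i + 1) * size]) / size for i in range(bands)]

def morph(vertices, levels, depth=1.0):
    """Scale each (x, y, z) vertex away from the origin by one band level."""
    morphed = []
    for i, (x, y, z) in enumerate(vertices):
        factor = 1.0 + depth * levels[i % len(levels)]
        morphed.append((x * factor, y * factor, z * factor))
    return morphed

# Hypothetical input: a 16-sample sine frame and a two-vertex "model".
frame = [math.sin(2 * math.pi * 2 * t / 16) for t in range(16)]
levels = band_levels(magnitude_spectrum(frame), bands=3)
model = morph([(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], levels)
```

Running this once per audio frame and redrawing the model each time gives the real-time effect; the vertex list plays the role of the matrix form mentioned above.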

Interface design by Ed Guild (www.vizzie.com)